TL;DR

Two years ago, I delved into the cryptocurrency scene as an investor and encountered a frustrating lack of reliable investment advice. I detailed this issue in this blogpost.

Last year, I worked on a Go-based project aiming to automate the tracking of cryptocurrency predictions on social networks, as discussed in this blogpost. While the system was mostly automated, a manual step of inputting predictions remained.

Recently, the emergence of LLMs made it possible to automate that remaining manual step as well. This blog post outlines how I implemented that automation.

Introduction

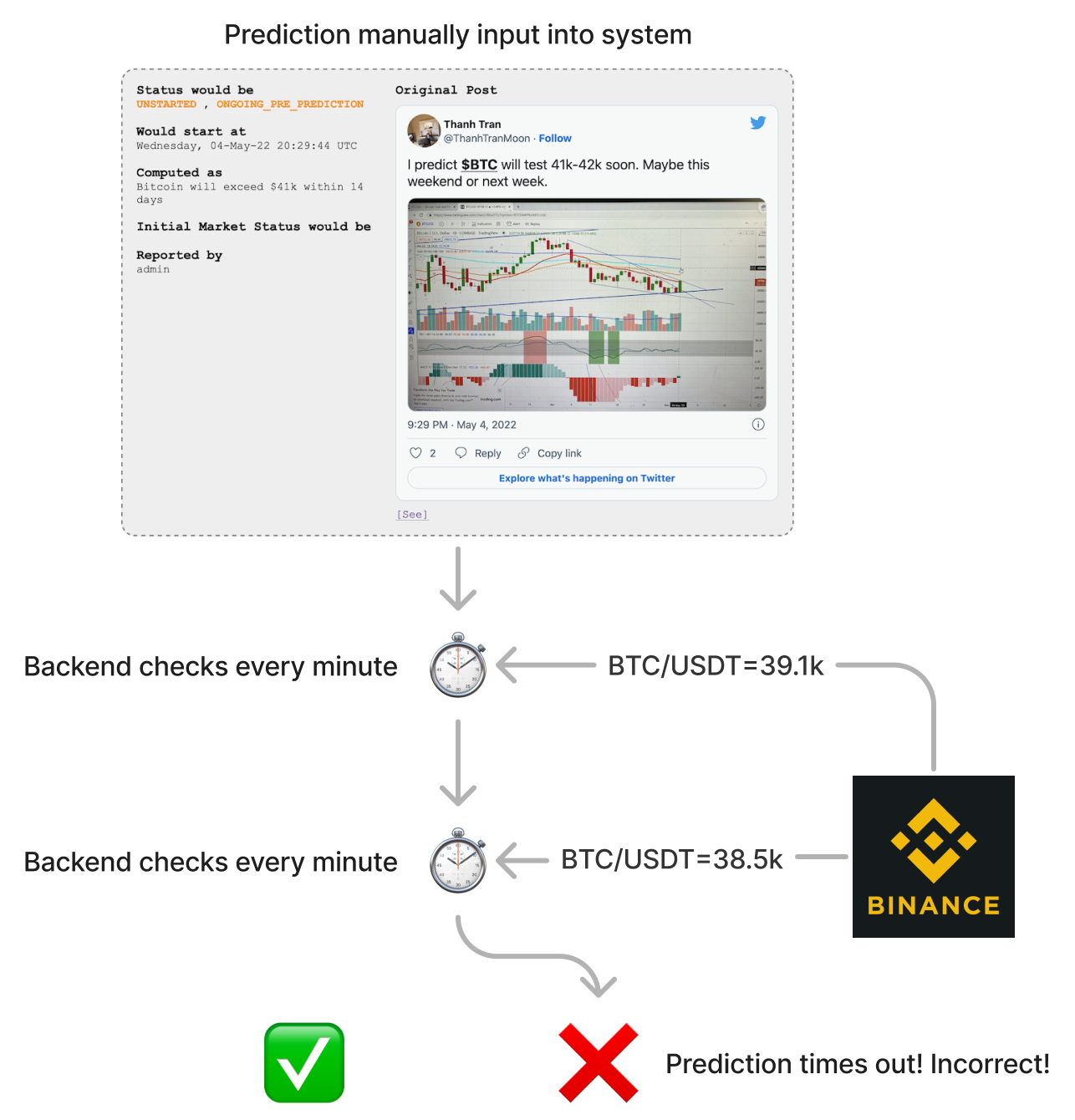

For a detailed understanding of the system, please refer to this blogpost. Here’s a simplified explanation:

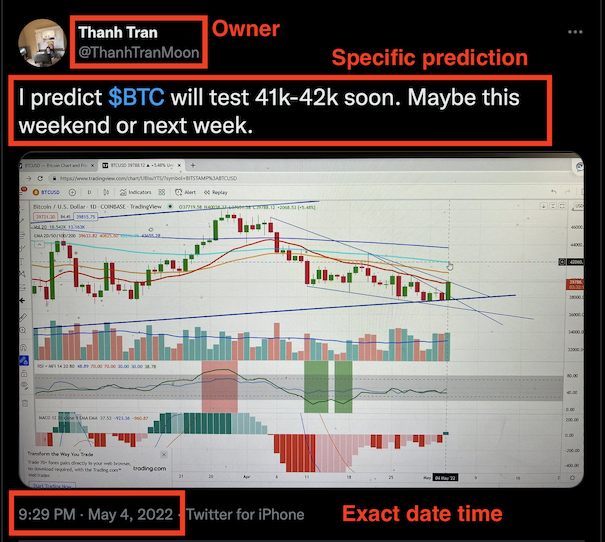

A prediction on a social network, like the example below, typically includes a date, an owner, and mostly uneditable content:

At a high level, automating prediction tracking involves three main steps:

How can we automate the manual step of inputting predictions?

Automating input

Automating the input step breaks down into three parts:

- Finding predictions

- Transforming prediction content into a structured form

- Inputting the structured prediction into the system

Finding predictions

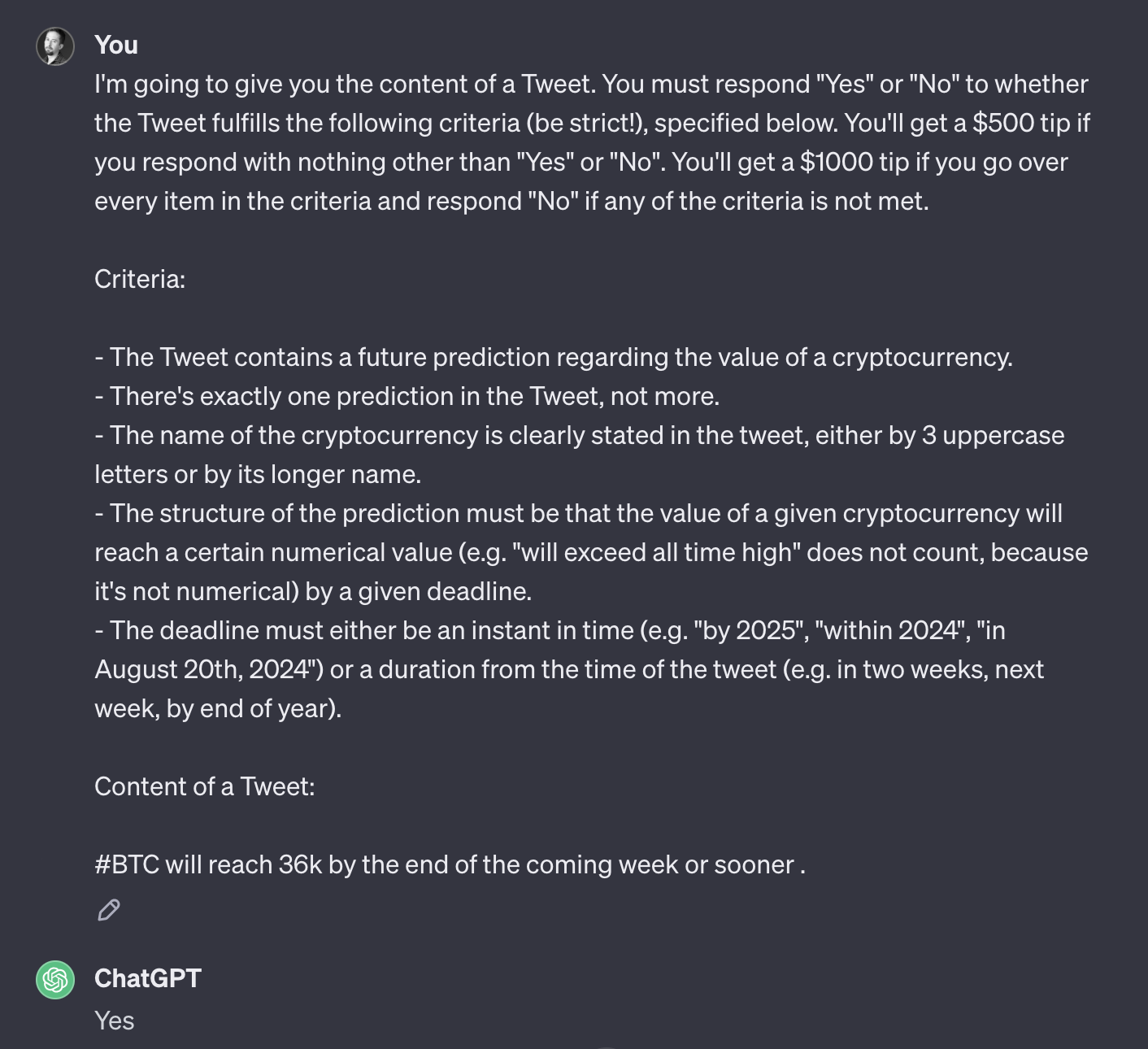

The manual process involves compiling a clever list of search terms on Twitter, running each one, analyzing resulting tweets, and inputting trackable predictions. Can this analysis be automated using, for instance, ChatGPT?

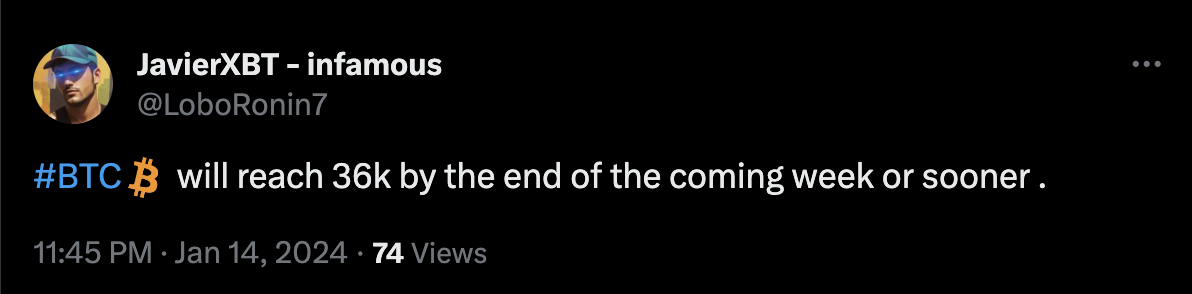

Examining this example tweet:

A well-iterated prompt seems effective:

Ok, that works!

If we can do it for one tweet, we can certainly repeat it for a million of them. The only thing we need is a script that:

- Keeps sniffing tweets on the Internet

- Dedupes them

- Sends each unique tweet, wrapped in this prompt, to the GPT-4 API

- Sends the candidates to step 2 (transforming into structured prediction)
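The dedupe-then-classify part of such a script could be sketched in Go as follows. This is only a minimal sketch under assumptions: the `Tweet` type, the `Classifier` interface, and the fingerprinting rule (author plus whitespace- and case-normalized text) are hypothetical names and choices made for illustration, and the actual LLM call is left behind the interface rather than tied to a specific client library.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"strings"
)

// Tweet is a hypothetical minimal representation of a scraped tweet.
type Tweet struct {
	ID     string
	Author string
	Text   string
}

// Classifier decides whether a tweet contains a trackable prediction.
// In a real pipeline this would wrap the prompt and the LLM API call.
type Classifier interface {
	IsPrediction(t Tweet) (bool, error)
}

// ClassifierFunc adapts a plain function to the Classifier interface.
type ClassifierFunc func(t Tweet) (bool, error)

// IsPrediction calls the underlying function.
func (f ClassifierFunc) IsPrediction(t Tweet) (bool, error) { return f(t) }

// fingerprint returns a stable key for a tweet, so that repeated search
// hits with the same author and (normalized) text collapse to one entry.
func fingerprint(t Tweet) string {
	normalized := strings.ToLower(strings.Join(strings.Fields(t.Text), " "))
	sum := sha256.Sum256([]byte(t.Author + "\x00" + normalized))
	return hex.EncodeToString(sum[:])
}

// FindCandidates skips duplicate tweets, asks the classifier about each
// unique one, and returns the tweets flagged as predictions.
func FindCandidates(tweets []Tweet, c Classifier) ([]Tweet, error) {
	seen := make(map[string]struct{})
	var candidates []Tweet
	for _, t := range tweets {
		key := fingerprint(t)
		if _, dup := seen[key]; dup {
			continue // already classified, do not pay for a second API call
		}
		seen[key] = struct{}{}
		ok, err := c.IsPrediction(t)
		if err != nil {
			return candidates, err
		}
		if ok {
			candidates = append(candidates, t)
		}
	}
	return candidates, nil
}
```

Deduping before classification matters mostly for cost: every tweet that reaches the classifier is a paid API call, so duplicates should be dropped first.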

The only issue is that Elon started charging $100/month for access to Twitter’s read API. The only workaround I’ve found is to get a Twitter login and build a Puppeteer script that logs in, runs the searches, and extracts the tweets. Sorry, Elon, but $100/month is unreasonable for a PoC; I’m not gonna DDoS your platform.

Transforming tweets into structured predictions

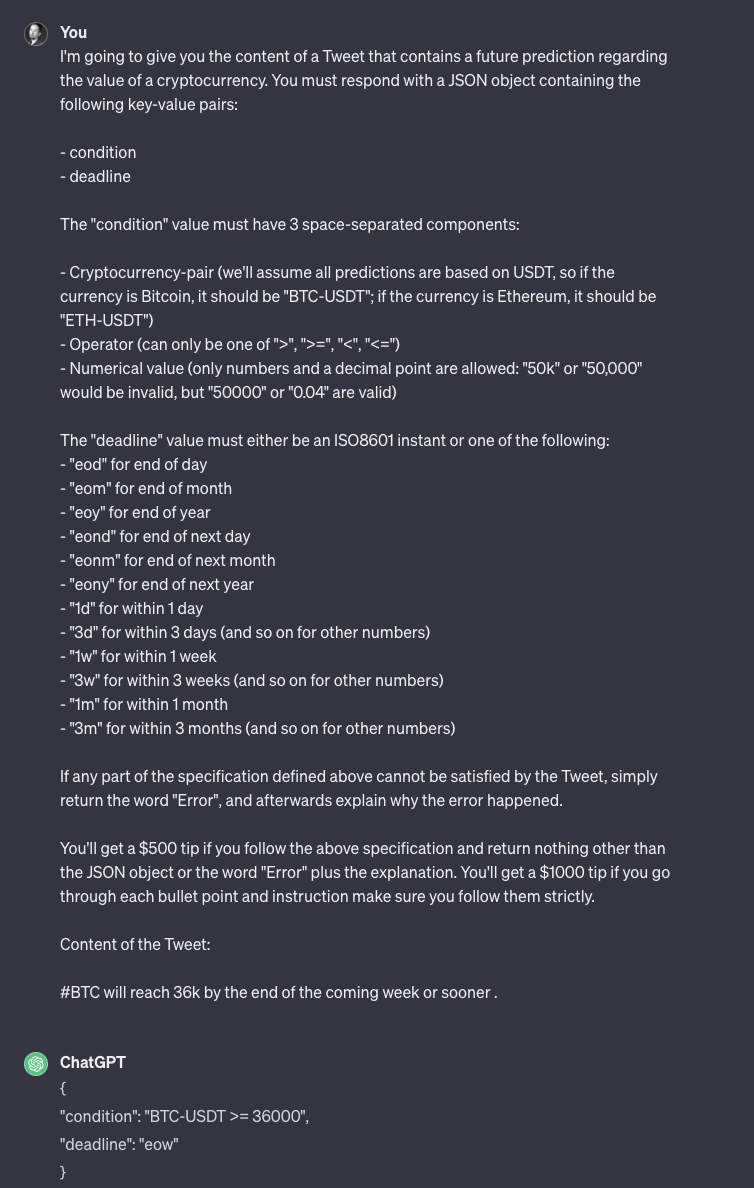

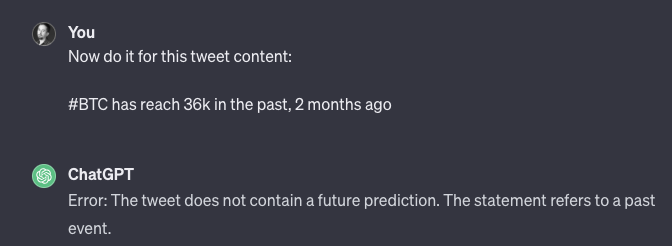

Before LLMs, automating this step was impossible. The prompt for transforming tweets looks promising. Let’s try it on an example:

Perfect!
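Whatever the prompt returns still has to be parsed and validated before it enters the system. Here is a minimal Go sketch, assuming the prompt is instructed to reply with a single JSON object. The `StructuredPrediction` schema and its field names (`asset`, `operator`, `target`, `deadline`) are hypothetical and not taken from the real system; they only illustrate the idea of rejecting malformed LLM output early.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
	"strings"
	"time"
)

// StructuredPrediction is a hypothetical schema for a trackable prediction,
// e.g. "asset >= target by deadline". The real system's fields may differ.
type StructuredPrediction struct {
	Asset    string    `json:"asset"`
	Operator string    `json:"operator"` // assumed to be ">=" or "<="
	Target   float64   `json:"target"`
	Deadline time.Time `json:"deadline"` // RFC 3339 timestamp
}

// ParsePrediction decodes the LLM's reply and rejects anything that does
// not match the expected shape, so bad output never reaches the tracker.
func ParsePrediction(llmOutput string) (StructuredPrediction, error) {
	var p StructuredPrediction
	dec := json.NewDecoder(strings.NewReader(llmOutput))
	dec.DisallowUnknownFields() // unexpected keys usually mean a confused reply
	if err := dec.Decode(&p); err != nil {
		return p, fmt.Errorf("decode LLM output: %w", err)
	}
	switch {
	case p.Asset == "":
		return p, errors.New("missing asset")
	case p.Operator != ">=" && p.Operator != "<=":
		return p, fmt.Errorf("unsupported operator %q", p.Operator)
	case p.Target <= 0:
		return p, errors.New("target must be positive")
	case p.Deadline.IsZero():
		return p, errors.New("missing deadline")
	}
	return p, nil
}
```

Predictions that fail validation can simply be dropped or queued for the manual review step, which keeps the automated path conservative.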

Inputting predictions into the system

This step should be trivial, since I separated the API from the BackOffice anticipating other integration use cases.
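As a rough illustration of why a separate API makes this easy, the Go sketch below builds and sends a JSON POST request. The `/predictions` path, the payload shape, and the function names are assumptions for the example only; the real API's routes and authentication are not described here.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
)

// NewCreateRequest builds a POST request carrying the prediction as JSON.
// The "/predictions" path is a hypothetical endpoint, not the real route.
func NewCreateRequest(baseURL string, payload any) (*http.Request, error) {
	body, err := json.Marshal(payload)
	if err != nil {
		return nil, fmt.Errorf("encode payload: %w", err)
	}
	req, err := http.NewRequest(http.MethodPost, baseURL+"/predictions", bytes.NewReader(body))
	if err != nil {
		return nil, fmt.Errorf("build request: %w", err)
	}
	req.Header.Set("Content-Type", "application/json")
	return req, nil
}

// Submit sends the request and treats any non-2xx status as an error.
func Submit(client *http.Client, req *http.Request) error {
	resp, err := client.Do(req)
	if err != nil {
		return fmt.Errorf("send request: %w", err)
	}
	defer resp.Body.Close()
	if resp.StatusCode < 200 || resp.StatusCode > 299 {
		return fmt.Errorf("unexpected status %s", resp.Status)
	}
	return nil
}
```

Splitting request construction from sending keeps the first half easy to unit test without a running server.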

Conclusion

Generative AI has turned out to be more practical than I expected, opening the door to use cases I once deemed impossible. I don’t yet fully trust the system to run unattended, but it has reached the point where a daily one-minute check is enough for me to review the automated predictions.

The success of this project has left me genuinely enthusiastic. I feel like integrating LLMs into all my future projects.